In 2020, long-standing higher education institutions grounded in well-established face-to-face learning practices had to adjust rapidly to online learning, which has created a demand for alternative and innovative assessment approaches. This article outlines an assessment framework that is effective and adaptable, suits online learning, provides students with more feedback than traditional approaches, and reduces the workload on teaching staff.

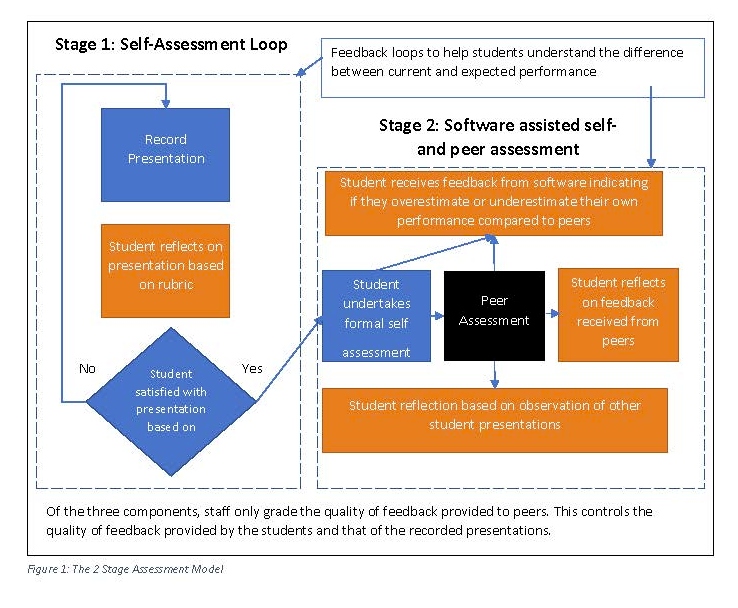

As outlined by Sadler, the basis of formative assessment is to use judgements about the quality of student work to shape and improve competency, with near-immediate impact, in an efficient and structured manner. This can be achieved by providing students with a supportive environment and feedback loops that help them understand the difference between current and expected performance, so that they can identify gaps and close them by acting on the feedback.

A typical face-to-face assessment of communication skills involves a student giving a presentation on a topic of interest (such as a Thesis presentation) to their peers. On completion they receive a mark based on a rubric, possibly supplemented with some verbal or written feedback. This feedback, even if it is listened to or read, may be hard to interpret and may be delivered at an inappropriate time. For example, feedback given directly after the presentation arrives when the student is relieved that the presentation is over and is not in an appropriate cognitive state to absorb and retain it. In most cases that feedback will not be reflected on or acted upon. Therefore, for feedback to be effective it needs to be in a form that students can respond to in a timely manner and that encourages absorption and retention.

To close the loop, students must also develop competency in evaluation, because sound judgements depend on evaluation skills. These skills are required of professional engineers and sit at the top of the Bloom's Taxonomy cognitive learning triangle. While evaluation is recognized as an important higher-order skill, the development of this competency can be overlooked. Ask yourself: how many assessment tasks in the engineering curriculum at your university give students feedback on their ability to evaluate performance and provide quality feedback? In most cases, students participate in many peer assessment activities but never receive explicit feedback on how accurate their judgement was or how useful their feedback was. Without this feedback, students do not get an opportunity to recalibrate their perception of expectations.

The assessment model presented has been designed to improve communication and evaluation (including feedback) competencies across two stages. Both stages provide feedback loops that help students understand the difference between current and expected performance. This process is outlined in Figure 1. Key to the process are scalability to large class sizes and reduced staff workload.

Stage One involves the student recording the presentation. For example, if the purpose is to improve Thesis presentation skills, the student records themselves giving that presentation. They then watch the recording and evaluate their performance against the rubric. If they believe their current performance does not meet expected performance, they use the visual feedback to make changes and repeat the recording. This process repeats until the student believes they have met expected performance. The use of video feedback is not new and has been shown by many researchers to be highly effective. The increased use of video feedback in sport reinforces the usefulness of such an approach.

Stage Two involves formal assessment activities using specialized software such as Spark Plus. Spark Plus was designed for evaluating team contributions but has been repurposed for this activity; other software platforms may offer similar functionality. First, each student formally evaluates their own performance against the rubric in the software. The students are then grouped and asked to undertake peer assessment. The key component that makes the model work is that staff workload is centered on assessing the quality of the feedback students provide to their peers. That is, students are graded on the marks and feedback they give their peers, which in turn gives them feedback on their ability to evaluate. This ensures that students take the process seriously and incentivizes them to provide valuable and accurate feedback. As a consequence, staff no longer need to mark the individual presentations, because the quality of the peer feedback is high enough to rely on. However, teaching staff may still need to check presentations or make alterations when some of the feedback is inconsistent or questionable.

During the peer assessment, students take note of their peers' presentations and reflect on their own performance in comparison. At the conclusion of the assessment phase, the software produces a score indicating whether each student overestimated or underestimated their own performance compared with their peers' assessment of it. This gives students an opportunity to recalibrate their views. An optional component is a reflection assessment in which students formally recognize and document how they will use the feedback to improve future performance.
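To make the overestimate/underestimate score more concrete, the following is a minimal Python sketch of one way such a score could be computed: the ratio of a student's self-assessed mark to the mean of the marks their peers gave them. This is an illustrative assumption only, not the calculation Spark Plus actually performs; the function names `self_vs_peer_ratio` and `calibration_label` and the 5% tolerance are hypothetical.

```python
from statistics import mean


def self_vs_peer_ratio(self_score: float, peer_scores: list[float]) -> float:
    """Ratio of a self-assessed mark to the mean peer mark.

    Hypothetical illustration; not the formula used by any specific platform.
    A value above 1 suggests the student rated themselves higher than their
    peers did; a value below 1 suggests they rated themselves lower.
    """
    if not peer_scores:
        raise ValueError("at least one peer score is required")
    peer_mean = mean(peer_scores)
    if peer_mean <= 0:
        raise ValueError("mean peer score must be positive")
    return self_score / peer_mean


def calibration_label(ratio: float, tolerance: float = 0.05) -> str:
    """Turn the ratio into a label, using an assumed tolerance band around 1."""
    if ratio > 1 + tolerance:
        return "overestimates"
    if ratio < 1 - tolerance:
        return "underestimates"
    return "aligned"


# Example: a student marks themselves 8/10, while three peers each give 6/10.
ratio = self_vs_peer_ratio(8, [6, 6, 6])
print(calibration_label(ratio))
```

A ratio is used here rather than a difference so that the result does not depend on the scale of the rubric; a real platform may well make a different choice.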

Prior to the assessment, students are provided with supporting resources and lectures. These cover presentation techniques, how to evaluate presentations, and how to provide supportive feedback. My implementations involve uploading and sharing content on YouTube, but other similar platforms can also be used. Privacy settings can be enabled should a student request them.

The two-stage assessment model outlined supports learning and provides substantially more feedback than traditional methods, while also reducing teaching staff workload. This is because the only component assessed by teaching staff is the quality of the peer assessment. With Spark Plus this is a quick and simple task, which makes the approach highly scalable to any class size.

The scientific basis and evaluation of the approach can be found in this article, showcasing its first use in Thesis presentations. Since then the approach has expanded and proven adaptable. It has been used in multiple learning scenarios, including improving job interview responses in a professional skills course. The key to changing the outcomes is to change the marking rubric. For example, a creativity-focused rubric was used in a first-year subject to encourage modern communication techniques aimed at the students' peers. An example of what is possible is shown here: https://www.youtube.com/watch?v=DXpTdVXoyrE